Kubernetes Architecture and Core Components

What is Kubernetes?

Kubernetes is a container orchestration system that automates application deployment, scaling, and management. It groups containers into logical units, making them easier to manage. Kubernetes provides features like auto-scaling, load balancing, self-healing, and service discovery. Originally built by Google, it is currently maintained by the Cloud Native Computing Foundation (CNCF).

What is Kubernetes architecture?

Kubernetes is a popular open-source platform for deploying and managing containerized applications. Because it automates deployment, scaling, and management, developers can focus on writing code instead of worrying about the underlying infrastructure. Its architecture describes the components that make this automation possible and how they interact.

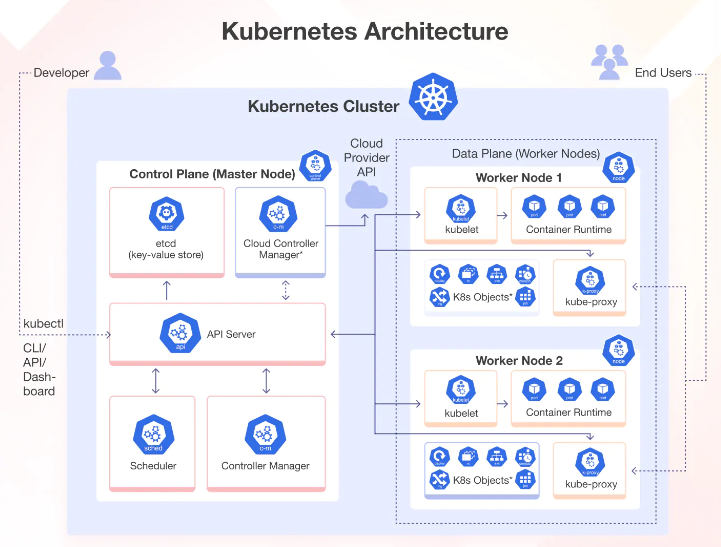

Kubernetes has a highly modular architecture that makes it flexible and scalable. At a high level, it is composed of several components, including the API server, etcd, the scheduler, the controller manager, kubelets, and the Kubernetes networking components. These components work together to provide a platform for deploying and managing containerized applications in a K8s cluster.

Kubernetes architecture components

Kubernetes architecture follows a master-worker model, where the master, known as the control plane, manages the worker nodes. Containers (encapsulated in pods) are deployed and executed on the worker nodes. These nodes can be virtual machines (on-premises or in the cloud) or physical servers.

Let’s break down the important components of this architecture:

Control plane

The control plane is responsible for container orchestration and maintaining the state of the cluster.

Kubernetes control plane components

The Kubernetes control plane consists of several components, each responsible for a specific task (as described below). These components work together to ensure that the actual state of the cluster matches the desired state that has been declared for it.

Kube-apiserver

The kube-apiserver is the central hub of the cluster: it lets users and other components communicate with the cluster. Monitoring systems and third-party services may also use it to interact with the cluster. When you manage a cluster with a CLI such as kubectl, the CLI talks to the API server over its HTTP REST API.

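Internal cluster components, such as the scheduler and the controller manager, also talk to the API server over this HTTP API, typically with Protobuf-encoded payloads. gRPC is used on the API server’s connection to etcd.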

The API server encrypts its communications with other components via TLS to ensure security. Its primary functions are to manage API requests, validate data for API objects, authenticate and authorize users, and coordinate processes between the control plane and worker node components.

The API server is the only component that talks to etcd directly. It also includes a built-in bastion apiserver proxy, which enables access to ClusterIP services from outside the cluster.
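To make the REST interaction described above more concrete, here is a minimal sketch of how resource URLs on the API server are laid out. The helper name `resource_path` is hypothetical and only builds the path; it does not contact a cluster or handle authentication.

```python
def resource_path(resource, namespace=None, name=None, group="", version="v1"):
    """Build the REST path under which the API server serves a resource.

    Core-group resources (pods, nodes, ...) live under /api/<version>;
    named API groups (apps, batch, ...) live under /apis/<group>/<version>.
    This helper is illustrative and not part of any Kubernetes client library.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        # Namespaced resources are nested under their namespace.
        parts += ["namespaces", namespace]
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("pods", namespace="default"))
# /api/v1/namespaces/default/pods
print(resource_path("deployments", namespace="default", name="web", group="apps"))
# /apis/apps/v1/namespaces/default/deployments/web
```

A command such as `kubectl get pods -n default` ends up as a GET request against the first path, sent over TLS to the API server.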

etcd

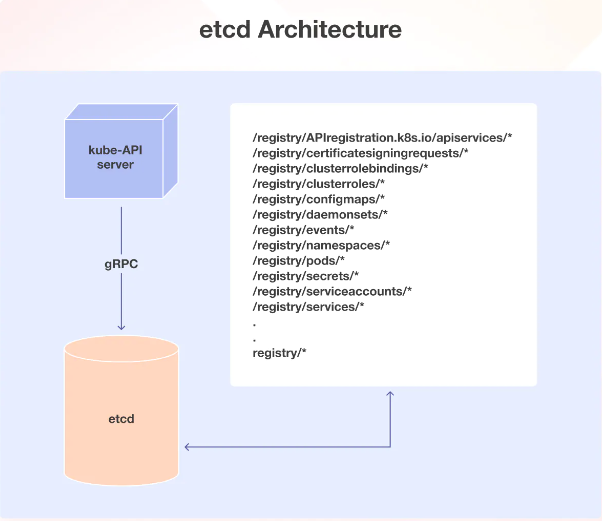

etcd is a distributed key-value store that Kubernetes uses to store the configuration and state data of the cluster. It is a highly available and consistent data store that gives the Kubernetes components a reliable way to store and retrieve data about the cluster.

etcd exposes its key-value API over gRPC, and Kubernetes uses that API to read and write cluster data. All objects are stored under the /registry directory key in key-value format.

The Kubernetes API server uses etcd’s watch feature to monitor changes to an object’s state. etcd is the only stateful component in the control plane, so its consistency and availability guarantees make it a good fit as the Kubernetes database.
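The key layout and the watch feature can be illustrated with a small in-memory stand-in. This is only a sketch: `TinyStore` and `registry_key` are hypothetical names, the store is not etcd, and the `/registry/<resource>/<namespace>/<name>` layout is an assumption that matches what is commonly seen for pods but differs for some resource types.

```python
class TinyStore:
    """In-memory stand-in for a key-value store with prefix watches (not etcd)."""

    def __init__(self):
        self._data = {}
        self._watchers = []  # list of (prefix, callback) pairs

    def watch(self, prefix, callback):
        """Register a callback that fires for every write under the prefix."""
        self._watchers.append((prefix, callback))

    def put(self, key, value):
        """Store the value, then notify every watcher whose prefix matches."""
        self._data[key] = value
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(key, value)

    def get(self, key):
        return self._data.get(key)


def registry_key(resource, namespace, name):
    # Assumed layout for namespaced objects; real layouts vary by resource.
    return f"/registry/{resource}/{namespace}/{name}"


store = TinyStore()
events = []
store.watch("/registry/pods/", lambda key, value: events.append((key, value)))

store.put(registry_key("pods", "default", "web-1"), {"phase": "Pending"})
store.put(registry_key("configmaps", "default", "app-config"), {"mode": "prod"})

# Only the pod write matched the watched prefix.
print(events)
```

The watcher on `/registry/pods/` is notified about the pod write but not about the ConfigMap write, which is the same idea the API server relies on to learn about changes without polling.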

Kube-scheduler

When deploying a pod in a Kubernetes cluster, the kube-scheduler identifies the best worker node that satisfies the pod requirements, such as CPU, memory, and affinity. Upon identification, it schedules the pod on the right node.

To do this, the scheduler relies on the cluster state stored in etcd. When a request to create a pod reaches the API server, the pod’s specification is persisted in etcd, and the scheduler then picks up pods that have no node assigned yet.

The kube-scheduler places a pod in two phases, followed by binding.

First, it filters the available nodes down to those that can run the pod. Then, it scores each remaining node using its scoring plugins. Finally, it selects the highest-scoring node and binds the pod to it. Pod priority is taken into account so that high-priority pods are scheduled ahead of lower-priority ones, and the scheduler can be extended with custom plugins.
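The filter, score, and bind steps above can be sketched in a few lines. This is a simplified illustration, not the real kube-scheduler: the `Node` and `Pod` classes and the scoring rule (prefer the node with the largest share of resources left over) are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


@dataclass
class Pod:
    name: str
    cpu_m: int
    mem_mi: int


def filter_nodes(pod, nodes):
    """Filtering phase: keep only nodes with enough free CPU and memory."""
    return [n for n in nodes
            if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi]


def score(pod, node):
    """Scoring phase (hypothetical rule): average fraction of resources left."""
    cpu_left = (node.free_cpu_m - pod.cpu_m) / max(node.free_cpu_m, 1)
    mem_left = (node.free_mem_mi - pod.mem_mi) / max(node.free_mem_mi, 1)
    return (cpu_left + mem_left) / 2


def schedule(pod, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    feasible = filter_nodes(pod, nodes)
    if not feasible:
        return None  # the pod would stay pending
    return max(feasible, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024),   # too little CPU
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096),
    Node("node-c", free_cpu_m=4000, free_mem_mi=512),   # too little memory
    Node("node-d", free_cpu_m=4000, free_mem_mi=8192),
]
print(schedule(Pod("web", cpu_m=1000, mem_mi=1024), nodes))  # node-d
```

Here node-a and node-c are filtered out, and node-d outscores node-b because more of its resources remain free after placement. The real scheduler combines many plugins, including affinity and taints, in each phase.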

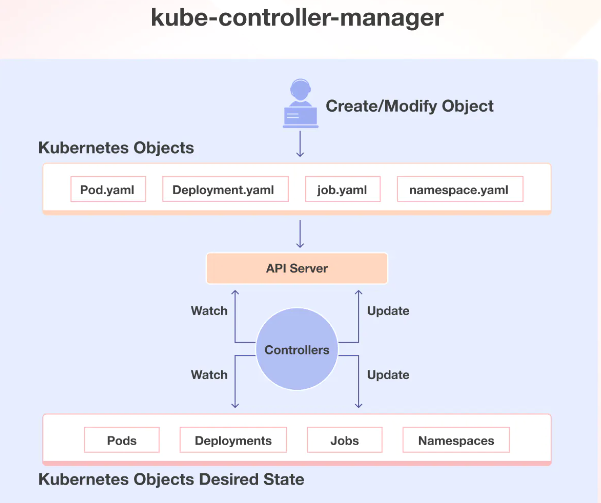

Kube-controller-manager

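The kube-controller-manager runs the built-in controllers. Each controller watches the cluster through the API server and acts to keep it in the desired state, for example by creating replacement pods when a workload has fewer replicas than requested.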
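The idea of driving the cluster toward a desired state can be sketched as a reconciliation step. This is a toy model under stated assumptions: the function `reconcile_once` and the pod names are hypothetical, and a real controller works against the API server rather than a local set.

```python
import itertools


def reconcile_once(desired_replicas, running, new_names):
    """Return the set of pods after one reconciliation pass.

    Adds pods while there are too few and removes pods while there are
    too many, so the result always has exactly `desired_replicas` entries.
    """
    running = set(running)
    while len(running) < desired_replicas:
        running.add(next(new_names))      # "create" a missing replica
    while len(running) > desired_replicas:
        running.remove(max(running))      # "delete" a surplus replica
    return running


# Hypothetical name generator for newly created pods.
names = (f"web-{i}" for i in itertools.count())

print(sorted(reconcile_once(3, {"web-0"}, names)))
# ['web-0', 'web-1', 'web-2']
print(sorted(reconcile_once(1, {"web-0", "web-1", "web-2"}, names)))
# ['web-0']
```

Running the same step again on an already-correct set changes nothing, which is the property that lets controllers run in a continuous loop.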

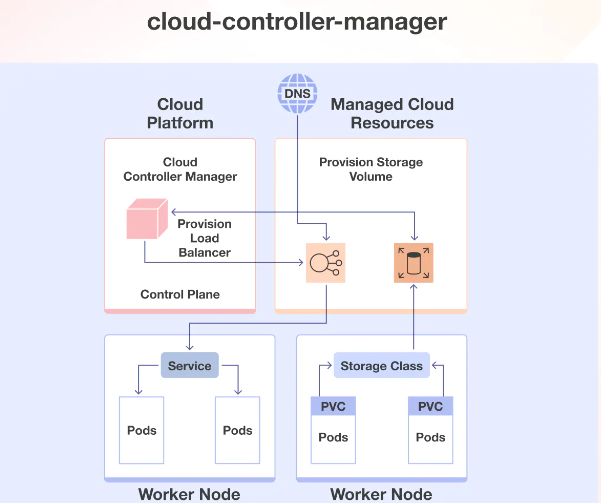

Cloud-controller-manager (CCM)

When deploying Kubernetes in cloud environments, it’s essential to bridge Cloud Platform APIs and the Kubernetes cluster. This can be done using the cloud controller manager, which allows the core Kubernetes components to work independently and enables cloud providers to integrate with Kubernetes using plugins.

For example, on AWS the cloud controller manager acts as an intermediary between the Kubernetes control plane and the AWS APIs. It provides integration with AWS services such as EC2 instances, Elastic Load Balancers (ELBs), and Elastic Block Store (EBS) volumes.

Worker nodes

Worker nodes are critical components in the Kubernetes architecture as they help run containerized applications.

Kubernetes worker node components

Worker nodes are the primary execution units in a Kubernetes cluster, where the real workloads run. Each worker node can host multiple pods, and each pod has one or more containers running inside it. Each worker node contains three components responsible for running and managing these pods: the kubelet, kube-proxy, and the container runtime.

Kubelet

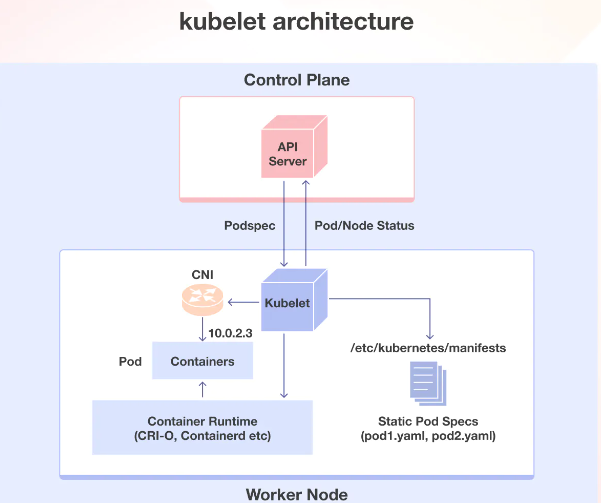

The kubelet is an agent that runs on every node in a Kubernetes cluster. It registers the worker node with the API server and works primarily from the podSpecs it receives from the API server.

It creates, modifies, and deletes the containers for a pod. It also handles liveness, readiness, and startup probes. In addition, it mounts volumes by reading the pod configuration and creating the corresponding directories on the host, and it reports node and pod status through calls to the API server.
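As a rough illustration of how a liveness check leads to a restart, the sketch below counts consecutive probe failures against a threshold. The function name `first_restart_index` is hypothetical; the default of 3 mirrors the default `failureThreshold` for Kubernetes probes, and the probe results are made-up sample data.

```python
def first_restart_index(probe_results, failure_threshold=3):
    """Return the index of the probe that would trigger a restart, or None.

    A container is considered unhealthy only after `failure_threshold`
    consecutive failed probes; any success resets the counter.
    """
    consecutive_failures = 0
    for index, ok in enumerate(probe_results):
        consecutive_failures = 0 if ok else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return index
    return None


# Two failures, a recovery, then three failures in a row.
results = [True, False, False, True, False, False, False]
print(first_restart_index(results))  # 6
```

The short failure streak at the start does not trigger a restart because the successful probe in between resets the counter.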

Kube-proxy

Kube-proxy runs on every node and communicates with the API server to obtain details about services and their associated pod IPs and ports. It watches for changes to services and endpoints and then creates or updates the rules that route traffic to the pods behind each service.

Its modes include iptables, IPVS, userspace, and kernelspace (the last for Windows nodes). In iptables mode, kube-proxy handles traffic with iptables rules and selects a backend pod at random for load balancing.
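The random selection in iptables mode works through a chain of rules that are tried in order, each matching with a certain probability. The sketch below assumes the commonly described scheme in which rule i of n matches with probability 1/(n - i), and shows that this gives every backend an equal share. The function names are hypothetical.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Match probability of each rule in a chain of n backends.

    Rules are evaluated in order; the last rule has probability 1 so that
    traffic which skipped all earlier rules is always delivered.
    """
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n):
    """Overall fraction of traffic that reaches each backend."""
    shares = []
    remaining = Fraction(1)  # traffic not yet matched by an earlier rule
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


print(rule_probabilities(3))  # [Fraction(1, 3), Fraction(1, 2), Fraction(1, 1)]
print(effective_shares(3))    # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```

Although the per-rule probabilities differ, each of the three backends ends up with one third of the traffic.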

Container runtime

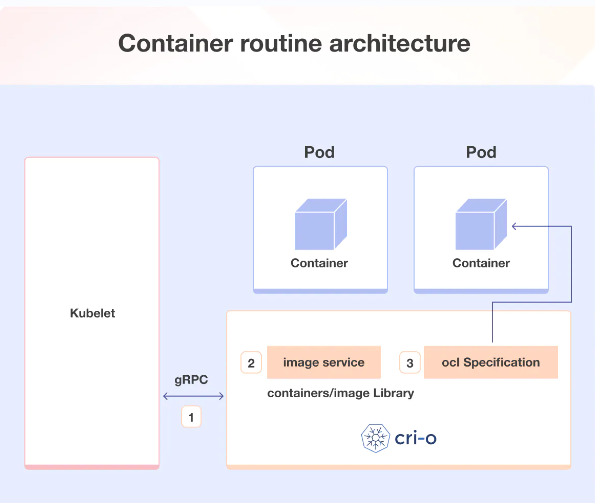

Just as the Java Runtime Environment (JRE) is required to run Java programs, a container runtime is required to run containers. The container runtime is responsible for tasks such as pulling images from container registries, allocating and isolating resources for containers, and managing the entire lifecycle of containers on a host.

Kubernetes interacts with the container runtime through the Container Runtime Interface (CRI), which defines APIs for creating, starting, stopping, and deleting containers, and managing images and the container network.

The Open Container Initiative (OCI) is a set of standards for container image formats and runtimes. Kubernetes supports multiple container runtimes compatible with CRI, such as CRI-O, containerd, and Docker Engine (through the cri-dockerd adapter).

The kubelet communicates with the container runtime using the CRI API to manage each container’s lifecycle, and it reports container information back to the control plane.

Kubernetes Cluster Addon Components

For a Kubernetes cluster to be fully functional, add-on components are needed alongside the primary components. The choice of add-ons largely depends on your project requirements and use cases.

Some popular add-on components you will need on a cluster include a CNI plugin for networking, CoreDNS for cluster DNS, Metrics Server for resource metrics, and the Web UI (Dashboard) for managing objects through a browser.

Enabling these add-ons provides capabilities, such as pod networking and DNS-based service discovery, that the core components do not provide on their own.

CNI plugin

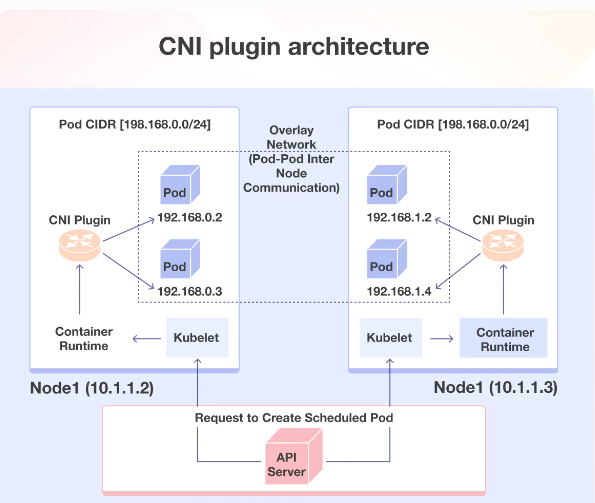

The Container Network Interface (CNI) is a standard way to create network connections for containers, and it works with many different orchestration tools, not just Kubernetes. Organizations have different needs when it comes to container networking, such as security and isolation.

Many vendors and open-source projects have built solutions for these needs on top of CNI. These solutions are called CNI plugins, and you can choose one according to your needs.

This is how things work when using a CNI plugin with Kubernetes: each pod (a container or group of containers) gets a unique IP address. The CNI plugin then connects the pods to each other, no matter where they are located. This means that pods can communicate with each other even if they are on different nodes.
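One common way to give every pod a unique IP is to carve a cluster-wide address range into per-node subnets and hand out addresses from each. The sketch below shows only that allocation idea. The function name `allocate_pod_ips`, the range 10.244.0.0/16, and the /24 per-node size are assumptions for the example, and real plugins also reserve addresses (for gateways, for instance) and set up the actual routes.

```python
import ipaddress


def allocate_pod_ips(cluster_cidr, node_prefix, pods_by_node):
    """Assign each pod a unique IP from its node's slice of the cluster range.

    Every node gets the next subnet of size `node_prefix`; pods on that
    node get consecutive host addresses from it.
    """
    cluster = ipaddress.ip_network(cluster_cidr)
    node_subnets = cluster.subnets(new_prefix=node_prefix)
    assignments = {}
    for node, pods in pods_by_node.items():
        hosts = next(node_subnets).hosts()  # usable addresses on this node
        for pod in pods:
            assignments[pod] = str(next(hosts))
    return assignments


ips = allocate_pod_ips(
    "10.244.0.0/16",
    24,
    {"node-a": ["web-1", "web-2"], "node-b": ["db-1"]},
)
print(ips)
# {'web-1': '10.244.0.1', 'web-2': '10.244.0.2', 'db-1': '10.244.1.1'}
```

Because the per-node subnets do not overlap, no two pods share an address, and the subnet of an IP identifies the node that hosts the pod.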

Many different CNI plugins exist, including popular ones like Calico, Flannel, and Weave Net. It is important to select the right one for your specific needs, since plugins differ in features such as network policy support and encryption.


Hope you like this blog.
