Understanding Kubernetes

What is Kubernetes?

Kubernetes is an extensible, portable, open-source platform designed by Google in 2014. It is primarily used to automate the deployment, scaling, and operation of container-based applications across a cluster of nodes, and it manages containerized services in a way that provides scalability, predictability, and high availability.

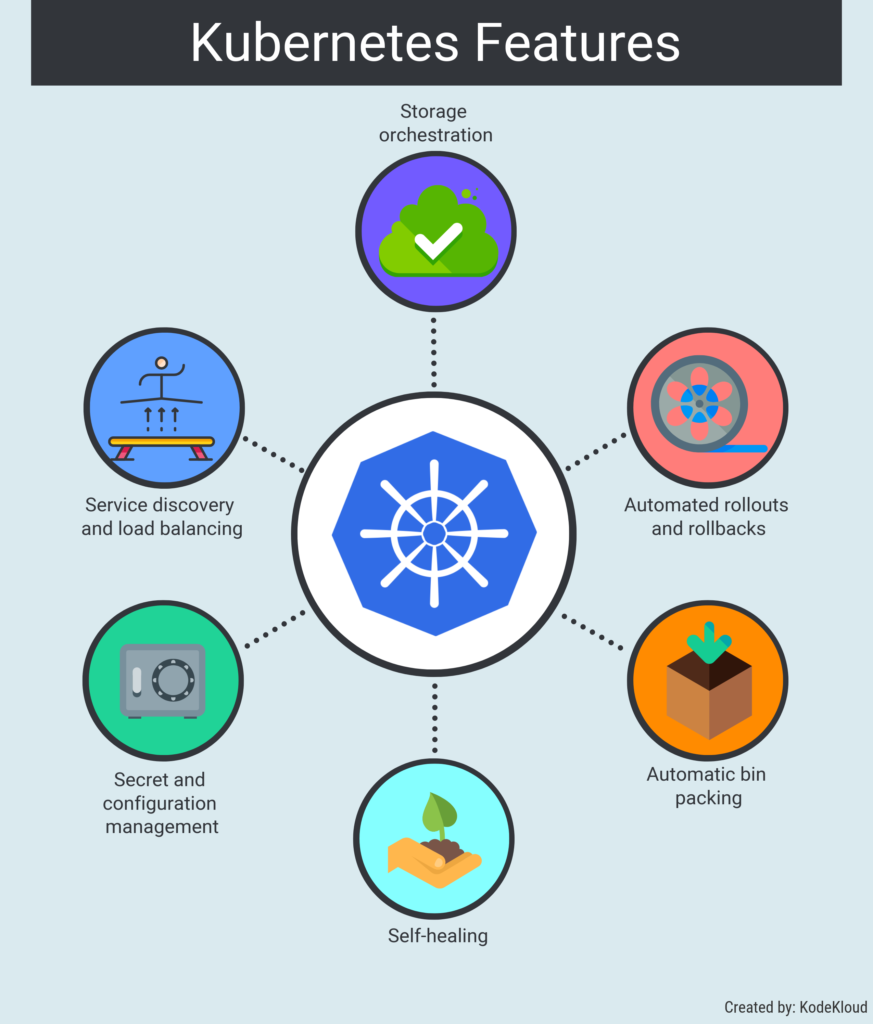

Features of Kubernetes:

1) Horizontal Scaling:

The HorizontalPodAutoscaler automatically increases or decreases the number of pods in a ReplicationController, Deployment, ReplicaSet, or StatefulSet based on observed CPU utilization or other metrics.
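The scaling decision can be sketched with the formula given in the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python below is a simplified model, assuming a single metric, the default tolerance of 0.1, and hypothetical `min_replicas`/`max_replicas` bounds standing in for the HPA's `minReplicas`/`maxReplicas`; the real controller also deals with missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for one metric (e.g. average CPU %).

    Hypothetical helper for illustration, not the controller's real code.
    """
    ratio = current_metric / target_metric
    # If the ratio is close enough to 1.0, the HPA skips scaling.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

Rounding up means the autoscaler errs on the side of slightly more capacity when the ratio is fractional.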

2) Service Discovery and Load Balancing:

Kubernetes gives each pod its own IP address and gives a set of pods a single DNS name (through a Service), and it load-balances traffic across them.
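A toy model makes the idea concrete: clients use one stable DNS name while the set of pod IPs behind it changes. The `ToyService` class and the pod IPs are hypothetical; the DNS pattern assumes the default `cluster.local` cluster domain, and round-robin selection is a simplification (the backend choice really depends on kube-proxy's mode, for example random selection in iptables mode).

```python
from itertools import cycle


class ToyService:
    """Hypothetical model of a Service: a stable name in front of pod IPs."""

    def __init__(self, name: str, namespace: str, pod_ips: list[str]):
        # Stable DNS name, assuming the default "cluster.local" domain.
        self.dns_name = f"{name}.{namespace}.svc.cluster.local"
        self.update_endpoints(pod_ips)

    def update_endpoints(self, pod_ips: list[str]) -> None:
        # Pods come and go; the name stays the same, only the backends change.
        if not pod_ips:
            raise RuntimeError("no ready endpoints")
        self.pod_ips = list(pod_ips)
        self._next = cycle(self.pod_ips)

    def pick_backend(self) -> str:
        # Round-robin across the current pod IPs.
        return next(self._next)


svc = ToyService("web", "default", ["10.1.0.4", "10.1.0.5"])
print(svc.dns_name)
print([svc.pick_backend() for _ in range(3)])
```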

3) Self-Healing:

Kubernetes automatically restarts containers that fail during execution. It also kills containers that do not respond to user-defined health checks, so that they can be restarted.
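Both mechanisms can be sketched in Python. The restart delays follow the documented kubelet behavior (exponential back-off starting at 10 seconds and capped at five minutes; the reset after a container has run cleanly for a while is not modeled), and the probe check assumes the default liveness `failureThreshold` of 3 consecutive failures. Both functions are hypothetical simplifications, not kubelet code.

```python
def restart_delays(restarts: int, initial_s: int = 10, cap_s: int = 300) -> list[int]:
    """Back-off (in seconds) before each of the next `restarts` restarts."""
    delays = []
    delay = initial_s
    for _ in range(restarts):
        delays.append(delay)
        # Double the delay each time, but never exceed the cap.
        delay = min(delay * 2, cap_s)
    return delays


def liveness_failed(probe_results: list[bool], failure_threshold: int = 3) -> bool:
    """True once `failure_threshold` consecutive probes have failed."""
    consecutive = 0
    for ok in probe_results:
        consecutive = 0 if ok else consecutive + 1
        if consecutive >= failure_threshold:
            return True
    return False


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
print(liveness_failed([True, False, False, True, False]))  # False
```

A single passing probe resets the failure count, which keeps one slow response from triggering a restart.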

4) Persistent Storage:

Persistent storage holds data that must not be lost when a pod is killed or rescheduled. Kubernetes supports various storage systems for this data, such as Google Compute Engine Persistent Disks (GCE PD) and Amazon Elastic Block Store (EBS).
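How a pod gets storage that outlives it can be pictured as a claim being matched to a volume. This is a simplified, hypothetical model of static PersistentVolumeClaim binding: a volume matches if it is unbound, has the same storage class, supports the requested access mode, and is at least as large as the request. Choosing the smallest matching volume is an assumption of this sketch; real binding also considers selectors, volume modes, and dynamic provisioning.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Volume:
    """Hypothetical stand-in for a PersistentVolume."""
    name: str
    capacity_gi: int
    access_modes: frozenset
    storage_class: str
    bound: bool = False


@dataclass
class Claim:
    """Hypothetical stand-in for a PersistentVolumeClaim."""
    request_gi: int
    access_mode: str
    storage_class: str


def bind(claim: Claim, volumes: list[Volume]) -> Optional[Volume]:
    candidates = [
        v for v in volumes
        if not v.bound
        and v.storage_class == claim.storage_class
        and claim.access_mode in v.access_modes
        and v.capacity_gi >= claim.request_gi
    ]
    if not candidates:
        return None  # the claim stays pending
    # Assumption for this sketch: pick the smallest volume that fits.
    chosen = min(candidates, key=lambda v: v.capacity_gi)
    chosen.bound = True
    return chosen


volumes = [
    Volume("pv-small", 5, frozenset({"ReadWriteOnce"}), "standard"),
    Volume("pv-large", 50, frozenset({"ReadWriteOnce"}), "standard"),
]
print(bind(Claim(10, "ReadWriteOnce", "standard"), volumes).name)  # pv-large
```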

5) Automated Rollouts and Rollbacks:

Kubernetes rolls out changes to an application or its configuration progressively, replacing old pods with new ones in stages. If a problem occurs, the change can be rolled back to a previous revision.
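For a Deployment, the pace of a rolling update is governed by `maxSurge` and `maxUnavailable`, which both default to 25%; per the Deployment documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The hypothetical helper below works out the resulting pod-count bounds during a rollout.

```python
import math


def rolling_update_bounds(replicas: int,
                          max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = math.ceil(replicas * max_surge_pct / 100)               # rounded up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounded down
    return replicas + surge, replicas - unavailable


# 10 replicas: at most 13 pods exist, and at least 8 stay available.
print(rolling_update_bounds(10))  # (13, 8)
```

Rolling back (for example with `kubectl rollout undo`) moves the Deployment to a previous revision's template, and the same bounds apply while it does so.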

6) Pod:

A pod is the smallest deployable unit in Kubernetes. It contains one or more containers that share a single IP address.

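7) Automatic Bin Packing:

Kubernetes lets the user declare the minimum (requests) and maximum (limits) compute resources, such as CPU and memory, for each container. The scheduler uses the requested resources to place containers on nodes so that node capacity is used efficiently.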
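To illustrate the bin-packing idea, the sketch below packs pods onto as few identical nodes as possible using their CPU requests and the classic first-fit-decreasing heuristic. This algorithm is an assumption chosen for illustration: the real kube-scheduler places pods one at a time using filter and score plugins. The pod names, requests, and the 1000m (one CPU) node size are hypothetical.

```python
def pack_pods(cpu_requests_m: dict[str, int], node_capacity_m: int) -> list[dict[str, int]]:
    """First-fit decreasing: largest requests first, each into the first node it fits."""
    nodes: list[dict[str, int]] = []
    for pod, cpu in sorted(cpu_requests_m.items(), key=lambda kv: kv[1], reverse=True):
        if cpu > node_capacity_m:
            raise ValueError(f"{pod} requests more CPU than any node has")
        for node in nodes:
            if sum(node.values()) + cpu <= node_capacity_m:
                node[pod] = cpu
                break
        else:
            # No existing node has room: add a new one.
            nodes.append({pod: cpu})
    return nodes


requests = {"api": 500, "worker": 700, "cache": 300, "web": 250, "cron": 200}
for i, node in enumerate(pack_pods(requests, node_capacity_m=1000)):
    print(f"node-{i}: {node}")
```

Placing the largest requests first tends to leave smaller gaps that the smaller pods can fill.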

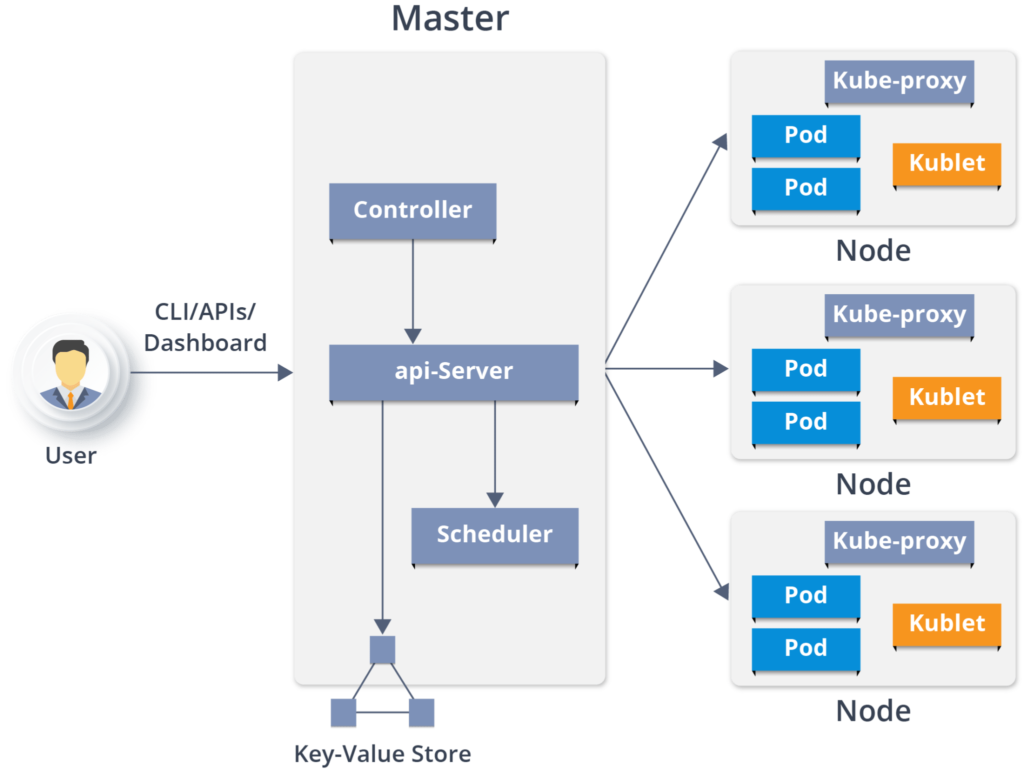

Kubernetes Architecture:

Cloud-controller-manager:

The cloud-controller-manager runs as a replicated set of processes in the control plane (typically as containers in pods). It connects your cluster to your cloud provider's API and runs only the controllers that are specific to that provider.

The Control plane (master):

The control plane is composed of the kube-apiserver, kube-scheduler, kube-controller-manager, and cloud-controller-manager, along with etcd, the key-value store that holds cluster data. kube-proxy and the kubelet run on each node, talking to the API server and managing that node's workload. Because the control plane handles most of Kubernetes' decision making, the nodes running these components typically do not run user containers; these nodes are typically designated as master nodes.

Kubelet:

The kubelet is an agent that runs on each node and manages the lifecycle of the pods on that node. It watches the control plane (through the API server) for new or changed pod specifications and ensures that the pods on its node are healthy and that the actual state of each pod matches its specification.
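The kubelet's job of making a pod's actual state match its specification can be pictured as a diff between the containers a spec asks for and the containers currently running. The `sync_pod` function below is a hypothetical, heavily simplified model (container name mapped to image), not the kubelet's actual sync logic.

```python
def sync_pod(spec: dict[str, str], running: dict[str, str]) -> tuple[list[str], list[str]]:
    """Compare desired vs running containers (name -> image).

    Returns (containers to start, containers to kill).
    """
    # Kill anything not in the spec, or running with the wrong image.
    to_kill = sorted(name for name, image in running.items() if spec.get(name) != image)
    # Start anything missing, or restart it if its image changed.
    to_start = sorted(name for name, image in spec.items() if running.get(name) != image)
    return to_start, to_kill


spec = {"app": "nginx:1.27", "sidecar": "busybox:1.36"}
running = {"app": "nginx:1.25", "old": "redis:7"}
print(sync_pod(spec, running))
```

Running the same comparison repeatedly is what lets the node converge on the spec even after containers crash or the spec changes.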

Kube-API Server:

The Kube-API Server (kube-apiserver) validates and configures data for API objects such as pods, services, and deployments. It exposes a REST API that acts as the frontend to the cluster's shared state, through which all other components interact. This means that every request, such as deploying pods or other Kubernetes API objects with kubectl, is authenticated and handled by the API server.
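Because every component talks to the API server over REST, each object has a predictable URL path: core resources such as pods live under `/api/v1`, while resources in named API groups such as `apps` live under `/apis/<group>/<version>`. The `api_path` helper below is a hypothetical illustration of that layout; kubectl builds requests like these for you.

```python
from typing import Optional


def api_path(group: str, version: str, resource: str,
             namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build a Kubernetes REST path (group "" means the core API group)."""
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace:
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name:
        parts.append(name)
    return "/".join(parts)


print(api_path("", "v1", "pods", namespace="default"))
# /api/v1/namespaces/default/pods
print(api_path("apps", "v1", "deployments", namespace="prod", name="web"))
# /apis/apps/v1/namespaces/prod/deployments/web
```

Running kubectl with a higher verbosity level (for example `-v=6`) logs the request URLs it sends, which follow this pattern.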

Node:

Kubernetes runs workloads by placing your pods onto nodes. Depending on your environment, a node can be a virtual or physical machine. Each node contains the components necessary to run pods. Nodes within Kubernetes fall into two classifications: master (control plane) nodes and worker nodes.

Kube-scheduler:

Running as part of the control plane, the kube-scheduler assigns pods to nodes within your cluster. It filters out nodes that lack the resources requested by your workload (limits are enforced on the node at runtime rather than used for placement) and then ranks the remaining candidates to choose the most suitable node for each pod.
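The scheduler's two phases can be sketched as follows: first filter out nodes whose remaining allocatable CPU and memory cannot cover the pod's requests, then score the survivors. The scoring here, loosely modeled on a "least allocated" strategy that prefers the node left with the most free capacity, is an assumption for illustration; the real kube-scheduler runs many configurable filter and score plugins. The `Node` fields and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical node: allocatable capacity and resources already requested."""
    name: str
    cpu_alloc_m: int
    mem_alloc_mi: int
    cpu_requested_m: int = 0
    mem_requested_mi: int = 0


def schedule(pod_cpu_m: int, pod_mem_mi: int, nodes: list[Node]) -> Optional[str]:
    # Filter: keep nodes where the pod's requests still fit.
    feasible = [
        n for n in nodes
        if n.cpu_requested_m + pod_cpu_m <= n.cpu_alloc_m
        and n.mem_requested_mi + pod_mem_mi <= n.mem_alloc_mi
    ]
    if not feasible:
        return None  # the pod stays Pending

    # Score: average fraction of CPU and memory left free after placement.
    def score(n: Node) -> float:
        cpu_free = (n.cpu_alloc_m - n.cpu_requested_m - pod_cpu_m) / n.cpu_alloc_m
        mem_free = (n.mem_alloc_mi - n.mem_requested_mi - pod_mem_mi) / n.mem_alloc_mi
        return (cpu_free + mem_free) / 2

    best = max(feasible, key=score)
    # Bind: account for the pod's requests on the chosen node.
    best.cpu_requested_m += pod_cpu_m
    best.mem_requested_mi += pod_mem_mi
    return best.name


cluster = [
    Node("node-a", 2000, 4096, cpu_requested_m=1500, mem_requested_mi=1024),
    Node("node-b", 4000, 8192, cpu_requested_m=1000, mem_requested_mi=2048),
    Node("node-c", 1000, 2048, cpu_requested_m=900, mem_requested_mi=512),
]
print(schedule(500, 1024, cluster))  # node-b
```

Flipping the score to prefer the node left with the least free capacity turns the same skeleton into a bin-packing style placement.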

Hope you like this blog.
